
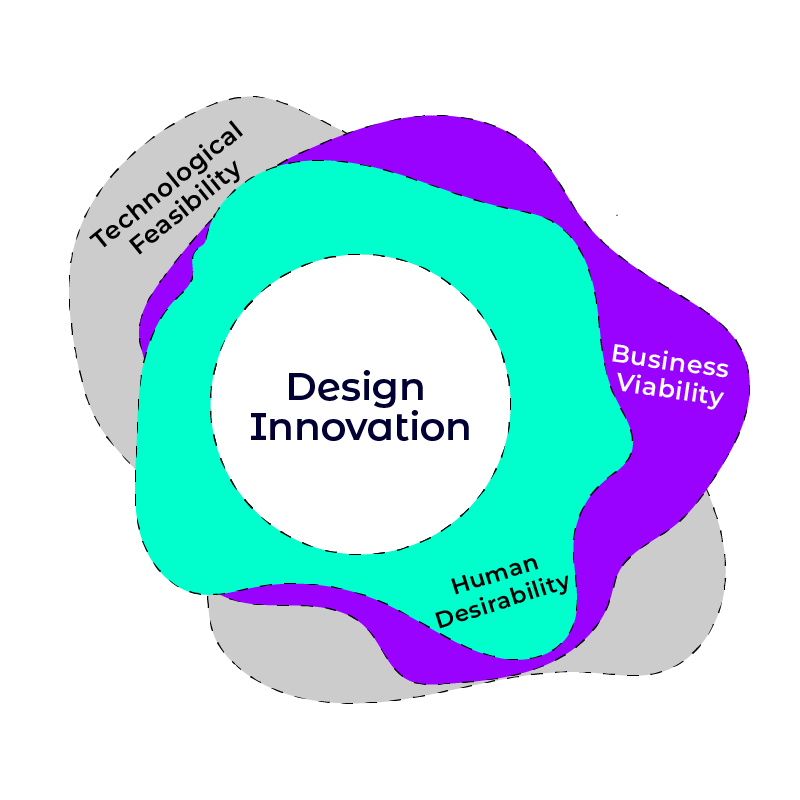

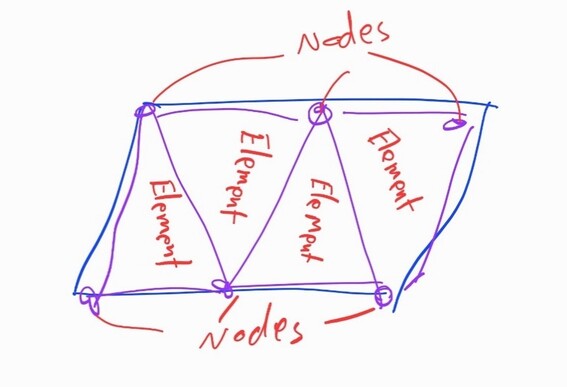

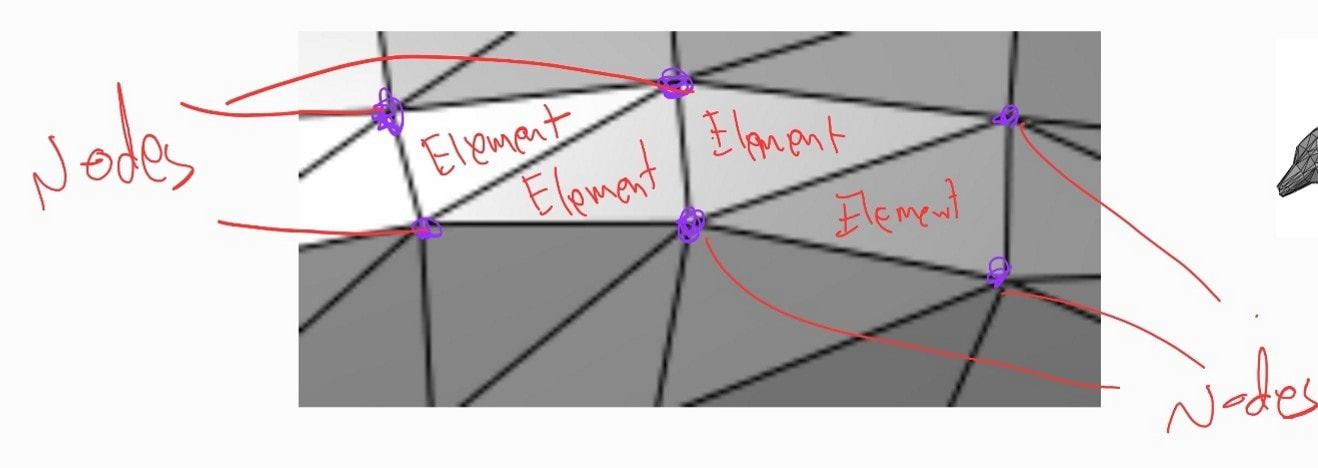

In today's world, design and technology are increasingly intertwined, and more projects now combine the two. This convergence has created fields that merge creativity with technical expertise and push the boundaries of what's possible. One field that showcases this blend is data visualisation, which has become a powerful tool for communicating complex information visually.

Data visualisation has become a crucial skill within the broader field of data science, evolving from a complementary technique into an essential part of the data science toolkit. Its growth is supported by two key factors: the increasing quality and quantity of datasets, and the advancement of the technological platforms that support visualisation.

To understand the field, it helps to look at its origins. The history of data visualisation dates back centuries, with early examples found in maps and astronomical charts. A more modern form began in the 17th century, when statistical data was first presented graphically. Since then, data visualisation has progressed significantly, adapting to new technologies and data types, most recently digital data.

As our digital world generates unprecedented volumes of data, presenting this information effectively has become critical across industries and disciplines. This has led to a surge in the popularity of data visualisation skills within the data science community, with professionals recognising its importance in extracting and communicating meaningful insights from complex datasets.

The Power of Visual Processing

Beyond the supporting factors already mentioned, such as advances in technology and the increasing quality of datasets, what makes visual data an inherently more engaging way to present information?
Visual information is significantly easier to digest than raw numbers or text. When data is presented visually, it often reveals more of the story hidden within the numbers. This is because our brains are wired to process visual information quickly and efficiently: MIT neuroscientists found that the brain can identify images seen for as little as 13 milliseconds.

One way to visualise data is to show a set of connected items, the relationships between them, and the details within those connections. For example, the work of Barabási Lab in network science, shown in the “Hidden Patterns” exhibition, demonstrates how complex systems can be visualised. Their visualisations of social networks, biological systems, and technological networks have uncovered intricate relationships that were not apparent in the raw data. These visualisations allow researchers and viewers to grasp complex concepts and relationships at a glance.

“150 years of Nature” by Barabási Lab in the “Hidden Patterns” exhibition, visualising the papers’ co-citation network

The Art of Creating Compelling Visualisations

Creating compelling data visualisations requires careful consideration of the response you want from your audience. Choosing the right type of visualisation for the data and the story you want to tell is essential. This involves understanding your audience, selecting appropriate mediums, and ensuring the visualisation is accurate and easy to interpret. The goal is to create a visual representation that not only presents the data but also engages the viewer and guides them toward the intended insights.

Edward Tufte, a pioneer in data visualisation, emphasises the importance of "graphical excellence," which involves presenting complex ideas with clarity, precision, and efficiency. His principles have guided many data scientists and designers in creating visualisations that are not only informative but also aesthetically pleasing.
At its core, data visualisation is a form of storytelling. Artists like Refik Anadol and Aaron Koblin have pushed the boundaries of data visualisation, creating immersive and interactive experiences that tell compelling stories through data. Their work demonstrates how data visualisation can be both informative and emotionally engaging, turning abstract numbers into narratives that resonate with viewers on a personal level.

For instance, Refik Anadol's "Melting Memories" project uses brainwave data to create stunning visual art, exploring the intersection of memory and technology. Aaron Koblin's "Flight Patterns" visualises air traffic data, transforming mundane flight paths into mesmerising patterns that highlight the complexity and beauty of global travel.

“Melting Memories” by Refik Anadol (2018), visualising human brainwave data

“Flight Patterns” by Aaron Koblin (2005), visualising air traffic over North America


Author: The following blogs are written by the TforDesign team and community members.


© 2013 - 2024 TforDesign. All rights reserved.

Terms & Conditions | Privacy Policy | Cookie Policy | Sitemap


RSS Feed
